InstaSynth

Listen to Your Colors

Date: May 2017

Categories: Audiovisual Performance, Data Art, Visualization, Sonification

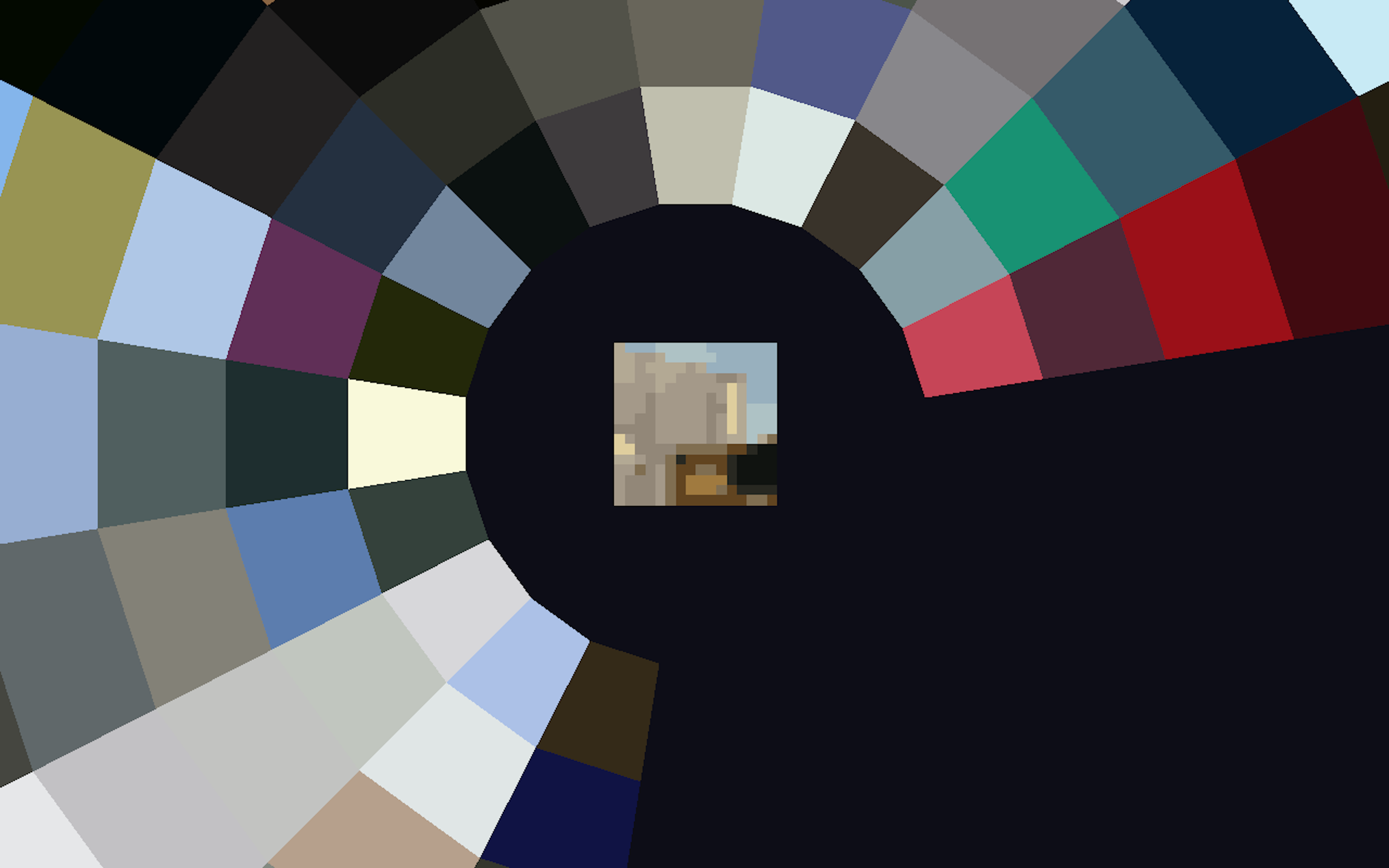

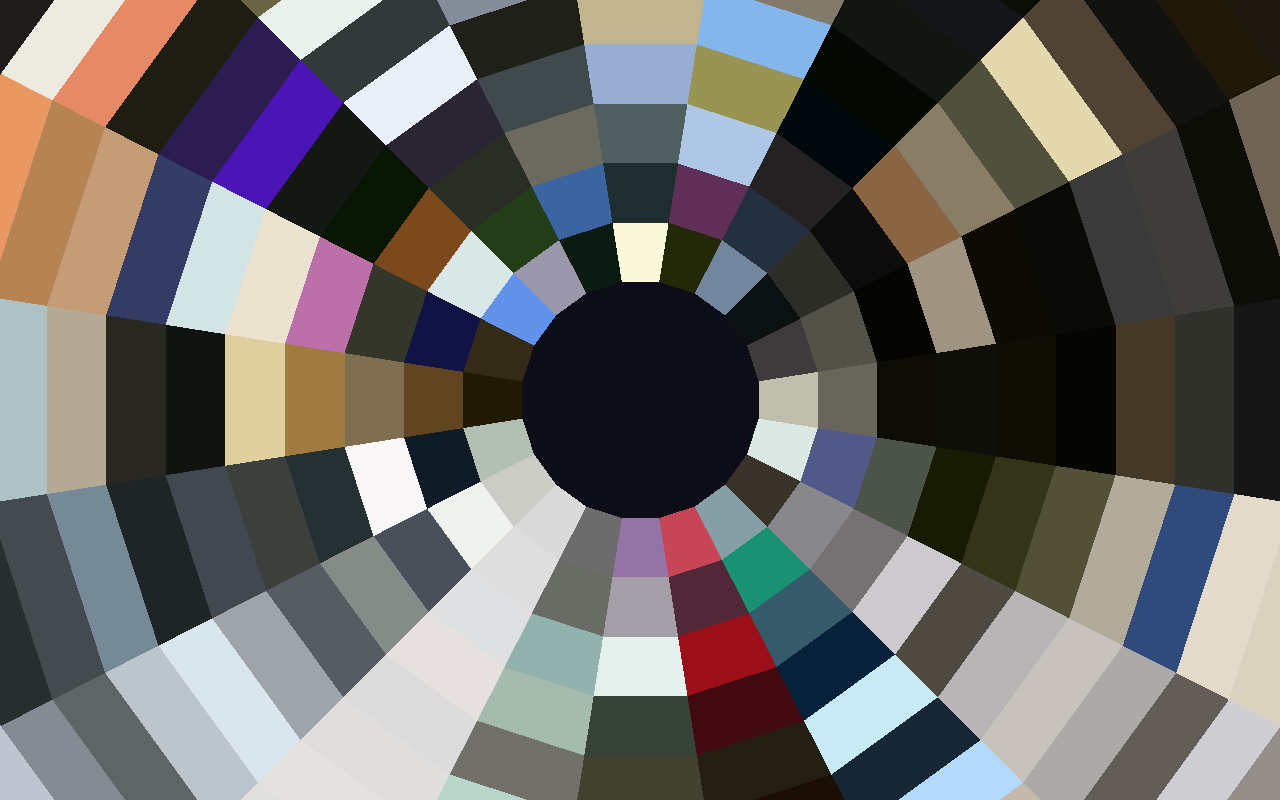

InstaSynth is an image-based sonification project that transforms personal Instagram images into a unique audiovisual piece. After gathering the 20 most recent photos from my Instagram account via the Instagram API, InstaSynth shows each image in sequence, extracts its 12 dominant colors, and pixelates the image based on those colors. The pixelated image is then fragmented and dropped into a rotating, transparent hemispheric structure in the background, which is composed of 20 vertical bars. Each bar has 12 sections, which are filled with the dominant colors of an image. As a result, InstaSynth generates a hemispheric color palette of the 20 images in three-dimensional space. Each colored bar produces a sound through additive synthesis: the hue values of a bar's 12 colors are mapped to the frequencies of 12 sine oscillators, one oscillator per color. Each oscillator's amplitude is determined by the brightness of its mapped color and by how dominant that color is. The generated sound is also spatialized according to the bar's position. InstaSynth triggers each bar's sound in time with a set BPM (beats per minute), so the hemispheric color palette serves as a color-based sound synthesis instrument. The project aims to give people a novel artistic experience by creating abstract visuals and sound from real images.
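The color-to-sound mapping described above can be sketched in a few lines of Python. This is only an illustration, not the project's actual implementation. The frequency range (110 to 1760 Hz), the exponential hue-to-frequency curve, the sample rate, and the function names `hue_to_frequency`, `bar_partials`, `render_bar`, and `beat_interval` are all assumptions, as is representing a color's degree of dominance as a weight between 0 and 1. Spatialization is left out.

```python
import colorsys
import math

SAMPLE_RATE = 44100            # assumed audio sample rate (Hz)
F_MIN, F_MAX = 110.0, 1760.0   # assumed frequency range for the hue mapping


def hue_to_frequency(hue, f_min=F_MIN, f_max=F_MAX):
    """Map a hue in [0, 1] onto [f_min, f_max] along an exponential curve."""
    return f_min * (f_max / f_min) ** hue


def bar_partials(colors):
    """Turn one bar's colors into (frequency, amplitude) pairs.

    `colors` is a list of (r, g, b, dominance) tuples, with r, g, b in 0..255
    and dominance in 0..1 (a hypothetical share of the image per color).
    """
    partials = []
    for r, g, b, dominance in colors:
        hue, _sat, value = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
        # Amplitude combines brightness (HSV value) with the dominance weight.
        partials.append((hue_to_frequency(hue), value * dominance))
    return partials


def render_bar(colors, duration=0.25, sample_rate=SAMPLE_RATE):
    """Additive synthesis: sum one sine oscillator per color."""
    partials = bar_partials(colors)
    # Normalize by the total amplitude so the output stays within [-1, 1].
    total = sum(amp for _, amp in partials) or 1.0
    samples = []
    for i in range(int(duration * sample_rate)):
        t = i / sample_rate
        mixed = sum(amp * math.sin(2 * math.pi * freq * t) for freq, amp in partials)
        samples.append(mixed / total)
    return samples


def beat_interval(bpm):
    """Seconds between successive bar triggers at the given tempo."""
    return 60.0 / bpm
```

In this sketch a bar would be a list of 12 such color tuples, and a sequencer would call `render_bar` for the next bar every `beat_interval(bpm)` seconds. An exponential curve is used here because pitch perception is roughly logarithmic in frequency; a linear mapping would be an equally plausible reading of the description.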

AlloSphere Demonstration at the MAT 2017 End of Year Show: Re-habituation

This piece was created for and presented in the AlloSphere at UCSB, a three-story facility used to represent large and complex data through immersive visualization, sonification, and multimodal interaction.