Uncertain Facing

Date: Jun 2020

Categories: AI Art, Data Art, Audiovisual Installation, Generative

Uncertain Facing is a data-driven, interactive audiovisual installation that represents the uncertainty of data points whose positions in 3D space are estimated by machine learning techniques. It also aims to raise concerns about the possible unintended use of machine learning with synthetic (fake) data.

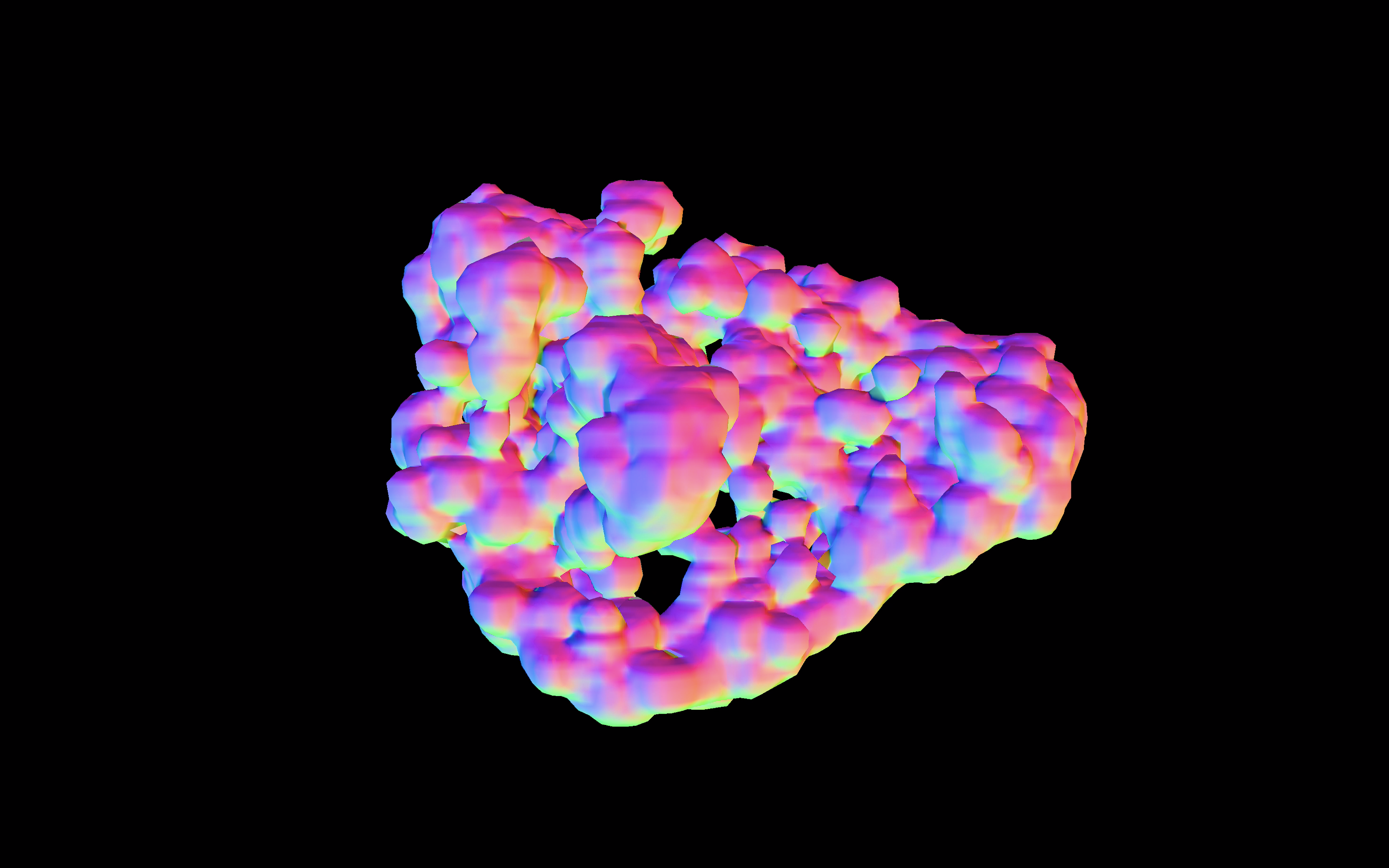

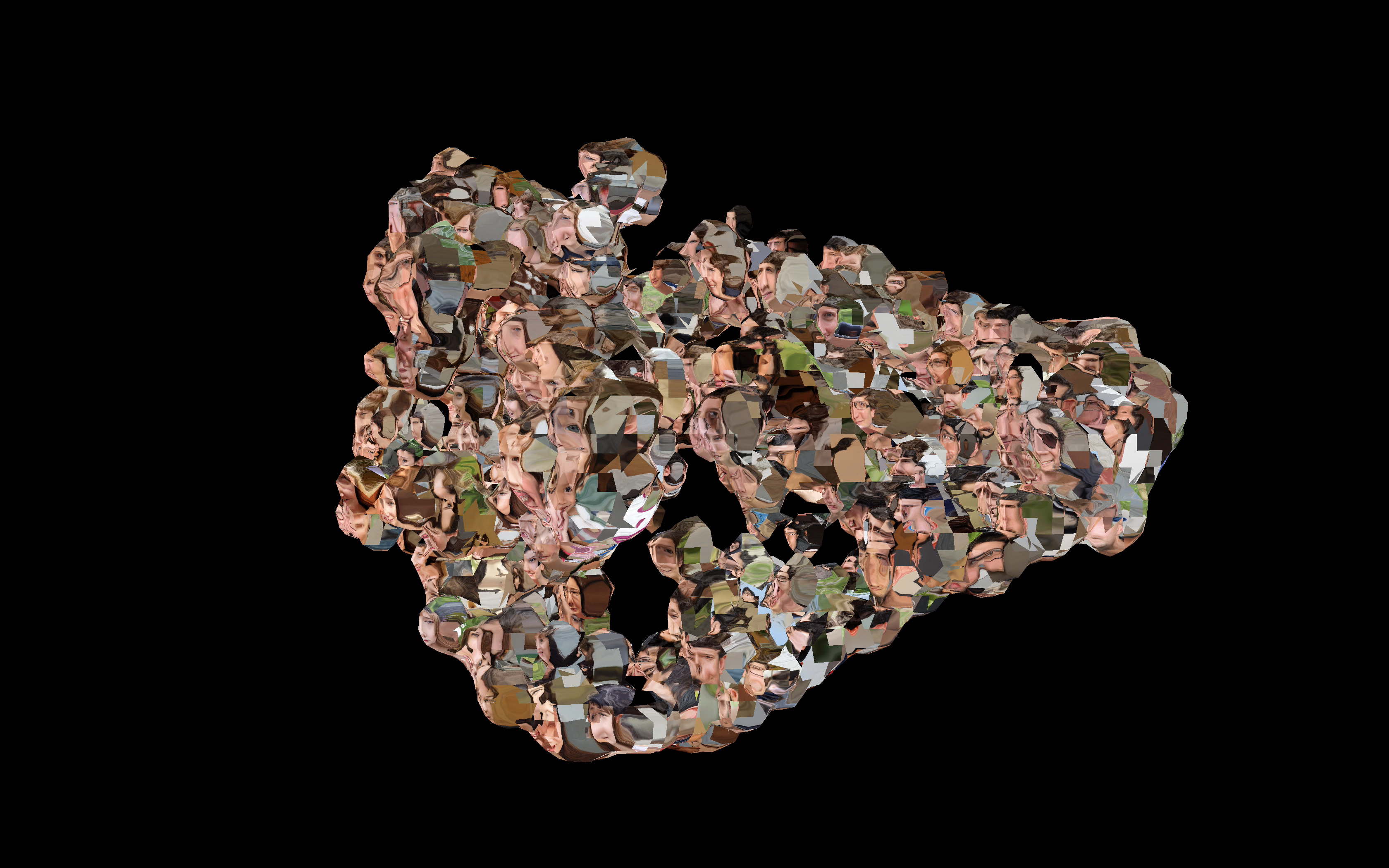

As a data-driven audiovisual piece, Uncertain Facing consists of three major components: 1) face data, comprising synthetic images of faces generated by StyleGAN2, a generative adversarial network (GAN) that produces portraits of fake human faces, together with their face embeddings obtained from FaceNet, a deep neural network trained to map face images to 128-dimensional feature vectors; 2) t-SNE (t-distributed Stochastic Neighbor Embedding), a non-linear dimensionality reduction technique for visualizing high-dimensional datasets; and 3) multimodal data representation based on metaball rendering, an implicit surface modeling technique in computer graphics, and granular sound synthesis.
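
To illustrate the metaball idea behind the third component, here is a minimal sketch of an implicit field in plain Python. It assumes a classic inverse-square falloff, r^2 / d^2, with a threshold of 1, so an isolated ball's surface is a sphere of radius r; the installation's actual falloff function, threshold, and renderer are not described here. The helper `blend_weights`, which returns each ball's share of the field as one possible way to mix the face textures mapped onto the surfaces, is hypothetical.

```python
# Minimal metaball field sketch (assumed inverse-square falloff, threshold 1.0).

Vec3 = tuple[float, float, float]
Ball = tuple[Vec3, float]  # (center, radius)


def sq_dist(a: Vec3, b: Vec3) -> float:
    """Squared Euclidean distance between two 3D points."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def contributions(point: Vec3, balls: list[Ball]) -> list[float]:
    """Field contribution r^2 / d^2 of each ball at `point` (d clamped to avoid division by zero)."""
    return [radius * radius / max(sq_dist(point, center), 1e-12) for center, radius in balls]


def field(point: Vec3, balls: list[Ball]) -> float:
    """Total implicit field: overlapping contributions add up, which is what makes blobs fuse."""
    return sum(contributions(point, balls))


def inside(point: Vec3, balls: list[Ball], threshold: float = 1.0) -> bool:
    """The surface is the iso-surface field == threshold; points at or above it are inside."""
    return field(point, balls) >= threshold


def blend_weights(point: Vec3, balls: list[Ball]) -> list[float]:
    """Hypothetical: each ball's share of the field, usable as weights for mixing face textures."""
    c = contributions(point, balls)
    total = sum(c)
    return [v / total for v in c]


near = [((-1.2, 0.0, 0.0), 1.0), ((1.2, 0.0, 0.0), 1.0)]
far = [((-3.0, 0.0, 0.0), 1.0), ((3.0, 0.0, 0.0), 1.0)]
print(inside((0.0, 0.0, 0.0), near))  # True: the two surfaces have fused
print(inside((0.0, 0.0, 0.0), far))   # False: two separate blobs
print(blend_weights((0.0, 0.0, 0.0), near))  # [0.5, 0.5]
```

Two unit balls 2.4 apart each reach only 1.0 from their centers, yet their summed field at the midpoint is about 1.39, so the surface bridges the gap; 6.0 apart, the midpoint field is about 0.22 and the blobs stay separate.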

Uncertain Facing visualizes the real-time clustering of fake faces in 3D space by running t-SNE on their face embeddings. This clustering reveals which faces are similar to each other: t-SNE derives a 3-dimensional map from the 128-dimensional embeddings by converting pairwise distances into probability distributions over the data points and making the low-dimensional distribution match the high-dimensional one as closely as possible. However, whereas t-SNE is conventionally used for objective data exploration in machine learning, Uncertain Facing represents data points as metaballs to reflect the uncertain, probabilistic nature of the locations the algorithm yields. Face images are mapped onto the surfaces of the metaballs as textures, and when two or more data points move close together, their faces begin to merge, creating a fragmented, combined face: an abstract, probabilistic representation of data, as opposed to the exactness we expect from scientific visualization. Uncertain Facing also renders the error value that t-SNE measures at each iteration between the distribution in the original high-dimensional space and the derived low-dimensional distribution (the Kullback–Leibler divergence) as jittery motion of the data points.
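
The per-iteration error described above can be made concrete with a small, dependency-free sketch of the t-SNE cost. It follows the standard formulation (Gaussian affinities in the original space, a Student-t kernel in the map, KL divergence as the cost, and its gradient), but simplifies it by using one fixed bandwidth `sigma` instead of per-point perplexity calibration, and plain gradient descent without momentum or early exaggeration. The `jitter` function, which scales a random displacement by the current error, is a hypothetical stand-in for the installation's jittery motion, and every constant is illustrative.

```python
import math
import random

Vector = list[float]


def sq_dist(a: Vector, b: Vector) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def joint_p(X: list[Vector], sigma: float = 1.0) -> list[list[float]]:
    """Symmetric affinities p_ij in the original space with ONE fixed Gaussian bandwidth.
    Standard t-SNE instead tunes a sigma_i per point to match a target perplexity."""
    n = len(X)
    cond = []
    for i in range(n):
        row = [0.0 if j == i else math.exp(-sq_dist(X[i], X[j]) / (2 * sigma ** 2)) for j in range(n)]
        s = sum(row)
        cond.append([v / s for v in row])  # conditional p_{j|i}
    return [[(cond[i][j] + cond[j][i]) / (2 * n) for j in range(n)] for i in range(n)]


def joint_q(Y: list[Vector]) -> tuple[list[list[float]], list[list[float]]]:
    """Map affinities q_ij from a Student-t kernel (1 degree of freedom); also returns the raw kernel."""
    n = len(Y)
    kern = [[0.0 if i == j else 1.0 / (1.0 + sq_dist(Y[i], Y[j])) for j in range(n)] for i in range(n)]
    z = sum(map(sum, kern))
    return [[v / z for v in row] for row in kern], kern


def kl_divergence(P, Q) -> float:
    """The t-SNE cost KL(P || Q): the error between the high- and low-dimensional distributions."""
    n = len(P)
    return sum(P[i][j] * math.log(P[i][j] / Q[i][j])
               for i in range(n) for j in range(n) if i != j and P[i][j] > 0)


def gradient_step(Y, P, lr: float = 100.0):
    """One plain gradient-descent update; returns the new map and the cost of the map it started from."""
    Q, kern = joint_q(Y)
    n, dim = len(Y), len(Y[0])
    new_Y = []
    for i in range(n):
        grad = [0.0] * dim
        for j in range(n):
            if i != j:
                # dC/dy_i = 4 * sum_j (p_ij - q_ij) (y_i - y_j) / (1 + |y_i - y_j|^2)
                coeff = 4.0 * (P[i][j] - Q[i][j]) * kern[i][j]
                for d in range(dim):
                    grad[d] += coeff * (Y[i][d] - Y[j][d])
        new_Y.append([Y[i][d] - lr * grad[d] for d in range(dim)])
    return new_Y, kl_divergence(P, Q)


def jitter(point: Vector, error: float, scale: float = 0.05) -> Vector:
    """Hypothetical mapping: a larger t-SNE error gives a larger random display offset."""
    amp = scale * error
    return [c + random.uniform(-amp, amp) for c in point]


if __name__ == "__main__":
    random.seed(0)
    # Stand-in for 128-d FaceNet embeddings: random unit vectors.
    X = []
    for _ in range(10):
        v = [random.gauss(0.0, 1.0) for _ in range(128)]
        norm = math.sqrt(sum(c * c for c in v))
        X.append([c / norm for c in v])
    P = joint_p(X)
    Y = [[random.gauss(0.0, 1e-2) for _ in range(3)] for _ in X]
    for _ in range(50):
        Y, err = gradient_step(Y, P)
        shown = [jitter(y, err) for y in Y]  # jittered positions a renderer would draw
    print(f"KL divergence at the last iteration: {err:.4f}")
```

Random unit vectors stand in for FaceNet embeddings here; real embeddings would contain clusters for t-SNE to pull apart.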

Along with the t-SNE and metaball-based visualization, Uncertain Facing sonifies changes in the overall data distribution in 3D space using granular sound synthesis. The data space is divided into eight subspaces, and the locations of data points within them are tracked during the t-SNE operation. The density of data points in each subspace drives parameters of the granular synthesis, such as grain density. The error values are also used to determine the frequency range and duration of sound grains, representing the uncertainty of the data in sound.
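
The subspace tracking described above can be sketched as follows, assuming (the text does not say so) that the eight subspaces are the octants around the centroid of the current 3D map. The mapping in `grain_params` from octant density and t-SNE error to grain density, frequency band, and grain duration is entirely hypothetical, chosen only to show the shape of such a parameter mapping; the sketch produces synthesis parameters and does not generate any sound.

```python
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


@dataclass
class GrainParams:
    density: float      # grains per second
    freq_low: float     # Hz
    freq_high: float    # Hz
    duration_ms: float  # length of each grain


def centroid(points: list[Vec3]) -> Vec3:
    n = len(points)
    return tuple(sum(p[k] for p in points) / n for k in range(3))


def octant(point: Vec3, center: Vec3) -> int:
    """Index 0-7 built from the sign of each coordinate relative to the center."""
    x, y, z = (p - c for p, c in zip(point, center))
    return int(x >= 0) + 2 * int(y >= 0) + 4 * int(z >= 0)


def octant_densities(points: list[Vec3]) -> list[float]:
    """Fraction of all data points currently falling in each of the eight octants."""
    center = centroid(points)
    counts = [0] * 8
    for p in points:
        counts[octant(p, center)] += 1
    return [c / len(points) for c in counts]


def grain_params(density: float, error: float,
                 max_grains: float = 200.0, base_freq: float = 220.0) -> GrainParams:
    """Hypothetical mapping: denser octant -> more grains; larger error -> wider band, shorter grains."""
    spread = 1.0 + error
    return GrainParams(
        density=max_grains * density,
        freq_low=base_freq / spread,
        freq_high=base_freq * spread,
        duration_ms=max(5.0, 80.0 / spread),
    )


points = [(1.0, 1.0, 1.0), (2.0, 0.5, 3.0), (-1.0, -1.0, -1.0), (-2.0, 1.5, -3.0)]
for i, d in enumerate(octant_densities(points)):
    if d > 0:
        print(i, grain_params(d, error=0.8))
```

In a running system, `octant_densities` would be recomputed on every t-SNE iteration, so the grain streams follow the points as they migrate between subspaces.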

As an interactive installation, Uncertain Facing lets the audience explore the data, either in detail or as an overall structure, through a web-based UI on an iPad. Visitors can also take a picture of their face and send it to the data space to see how it relates to the data. Given the new face image, Uncertain Facing obtains its face embedding in real time and restarts t-SNE. While the visitor's real face is mixed and merged with the fake faces in 3D space, the UI also shows the nine faces most similar to it. Here, the piece suggests how machine learning could be misused in unintended ways, since FaceNet does not distinguish between real and fake faces.
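
Finding the nine most similar faces amounts to a nearest-neighbor query over the embeddings. The sketch below assumes the embeddings have already been computed and compares them by squared Euclidean distance after L2 normalization, the distance FaceNet embeddings are trained for; the function name `top_k_similar` and the random stand-in data are illustrative. Nothing in the query distinguishes a camera photo from a StyleGAN2 face, which is exactly the point the piece makes.

```python
import heapq
import math
import random

Embedding = list[float]


def l2_normalize(v: Embedding) -> Embedding:
    """Project an embedding onto the unit hypersphere (assumes a non-zero vector)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def sq_l2(a: Embedding, b: Embedding) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def top_k_similar(query: Embedding, gallery: list[Embedding], k: int = 9) -> list[tuple[int, float]]:
    """(index, squared distance) of the k gallery faces closest to the query, nearest first.
    The query is treated the same way whether it came from a camera or from StyleGAN2."""
    q = l2_normalize(query)
    scored = ((i, sq_l2(q, l2_normalize(g))) for i, g in enumerate(gallery))
    return heapq.nsmallest(k, scored, key=lambda item: item[1])


if __name__ == "__main__":
    random.seed(42)
    fake_faces = [[random.gauss(0.0, 1.0) for _ in range(128)] for _ in range(100)]  # stand-in embeddings
    visitor = [x + random.gauss(0.0, 0.1) for x in fake_faces[17]]  # a face resembling fake face 17
    for idx, dist in top_k_similar(visitor, fake_faces):
        print(idx, round(dist, 3))
```

A heap-based partial sort keeps the query cheap for a gallery of a few hundred faces; a much larger gallery would call for an approximate nearest-neighbor index instead.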

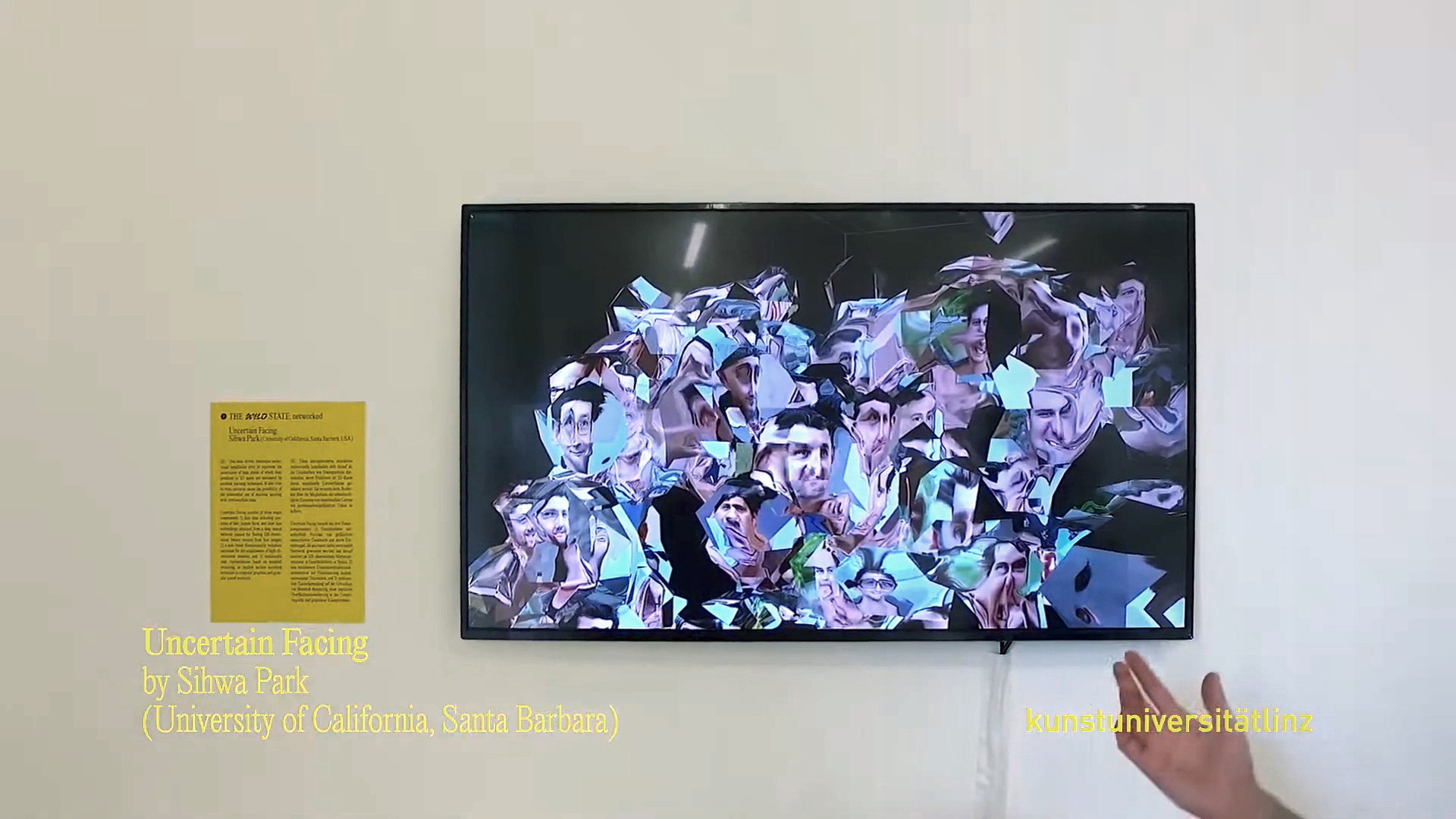

Interactive Installation at Ars Electronica Festival 2020

Group exhibition “The Wild State”, Kunstuniversität Linz 2020, Photo: Su-Mara Kainz

Related Exhibitions

Nov 2021, “Uncertain Facing” at the Special Exhibition of AIIF 2021 Advanced Imaging & Artificial Intelligence (Online, invited)

Dec 2020, “Uncertain Facing” at the NeurIPS 2020 Workshop on Machine Learning for Creativity and Design (Online; displayed on its AI Art Gallery)

Dec 2020, “Uncertain Facing” at the SIGGRAPH Asia 2020 Art Gallery (Virtual), Daegu, Korea

Oct 2020, “Uncertain Facing” at the IEEE VIS Arts Program (VISAP) Exhibition (Online), Salt Lake City, Utah, USA [Presentation]

Sep 2020, “Uncertain Facing” at “The Wild State” networked exhibition, Ars Electronica Festival 2020, Linz, Austria

Jun 2020, “Uncertain Facing” at the MAT 2020 End of Year Show: Bricolage (Online), UCSB, Santa Barbara, USA